44

Rapid Prototyping a GPT-3 App with Flask and PropelAuth

Today we are going to build a GPT-3 powered front-line support bot. Teams can sign up and program the bot to answer a set of questions with fixed responses. If the bot doesn't recognize the question, it will say "A human will be in touch shortly".

Where GPT-3 comes in is its ability to interpret a question like "Are you open on Sundays?" and realize it's asking the same thing as "What are your hours?" Let's start with a demo of GPT-3.

To experiment, we can use OpenAI's playground. The playground works like this: you configure GPT-3, provide a prompt, and GPT-3 completes it. An easy example from their list of examples uses the prompt:

Original: She no went to the market.
Standard American English:

which GPT-3 completes with

She didn't go to the market.

Pretty cool! Now let's get fancier.

For the config, we'll copy the "Chat" example but turn the temperature down to 0, since we don't want much randomness here. Let's start our prompt with essentially the description we used above:

The following are conversations with a chat AI.

The chat AI is programmed to give fixed responses to common questions.

If the chat AI is ever presented with a question not in its list of known questions, it responds with "A human will be in touch shortly".

Then we add in the pre-programmed questions and responses:

| Question | Response |
| What are your hours? | Our hours are Monday-Friday, 9am-5pm. |
| Are you currently hiring? | We're hiring for cashiers |
| What do you sell? | We sell clothing and accessories at affordable prices. You can see all our products at https://www.example.com |

And finally, we give it some examples to learn from.

###

Human: What are your hours?

AI: Our hours are Monday-Friday, 9am-5pm.

###

Human: What do you have in store?

AI: We sell clothing and accessories at affordable prices. You can see all our products at https://www.example.com

###

Human: what's the deal here

AI: A human will be in touch shortly

###

Human: Are you open monday?

AI: Our hours are Monday-Friday, 9am-5pm.

###

Human: Where are you located?

AI: A human will be in touch shortly

That's really not a lot of training data. ML models generally do better with more examples, so the more examples you can provide, the better.

Let's finish our prompt with an example question and see how GPT-3 responds. We'll use an exact copy of one of the questions as a base case to make sure GPT-3 understands the prompt.

###

H: Are you currently hiring?

GPT-3 responded with:

AI: We're hiring for cashiers

This might not seem impressive, but we've basically programmed a primitive chat bot with nothing but text. Let's start looking at more difficult prompts.
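Notice that the playground reply comes back with its "AI:" label attached. When we call GPT-3 from code later on, the prompt will end with "AI: " and generation will stop at the first newline, but the raw text may still carry stray whitespace. A small helper that normalizes a completion into one clean answer line might look like this (extract_answer is our own hypothetical name, not part of the openai library):

```python
def extract_answer(completion: str) -> str:
    """Turn raw completion text into a single clean answer line.

    Hypothetical helper: keeps only the first line (like a newline stop
    sequence would), drops an echoed "AI:" label, and trims whitespace.
    """
    first_line = completion.split("\n", 1)[0]
    answer = first_line.strip()
    if answer.startswith("AI:"):
        answer = answer[len("AI:"):].strip()
    return answer


print(extract_answer(" We're hiring for cashiers\nHuman: thanks"))
# → We're hiring for cashiers
print(extract_answer("AI: Our hours are Monday-Friday, 9am-5pm."))
# → Our hours are Monday-Friday, 9am-5pm.
```

In the Flask app, you could apply something like this to the text returned by the completion call before sending it back to the client.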

In each of these examples, GPT-3 was given only the "Human: ..." text, and it responded with the "AI: ..." text.

H: do you have shoes?

AI: We sell clothing and accessories at affordable prices. You can see all our products at https://www.example.com

Human: what can I buy?

AI: We sell clothing and accessories at affordable prices. You can see all our products at https://www.example.com

Human: can i buy dog food here

AI: A human will be in touch shortly

The above examples are pretty good. For the third question, the model isn't sure, so it gives the stock response. What happens if we add some filler text?

Human: I'm running really late, are you open on sundays?

AI: Our hours are Monday-Friday, 9am-5pm.

Human: One time I bought a product from a rival store.

AI: We're hiring for cashiers

The first response is perfect, but the second one is confusing. The input isn't a question so much as a statement. This is what happens when you don't provide enough training data and forget edge cases like random statements.

Human: when's closing time?

AI: Our hours are Monday-Friday, 9am-5pm.

Human: what happens if I show up at 4:59pm???

AI: A human will be in touch shortly

Apparently the AI doesn't have time for any sass. You get the idea, though: we feed it a prompt, and it provides a useful initial response.
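Since every legitimate reply is either one of the pre-programmed responses or the fallback, one possible guardrail (our own addition, not something GPT-3 provides) is to compare the completion against that list and fall back to the stock response for anything else. A minimal sketch, with hypothetical function names:

```python
FALLBACK = "A human will be in touch shortly"


def enforce_fixed_response(completion, allowed_responses):
    """Return the completion only if it matches a pre-programmed response.

    Anything else (a paraphrase, an invented answer, an empty string) is
    replaced with the fallback, so users only ever see fixed responses.
    """
    candidate = completion.strip()
    for response in allowed_responses:
        if candidate == response.strip():
            return response.strip()
    return FALLBACK


responses = [
    "Our hours are Monday-Friday, 9am-5pm.",
    "We're hiring for cashiers",
]
print(enforce_fixed_response(" Our hours are Monday-Friday, 9am-5pm.", responses))
# → Our hours are Monday-Friday, 9am-5pm.
print(enforce_fixed_response("Sure, we're open on Sundays!", responses))
# → A human will be in touch shortly
```

This only catches off-script text. It would not have caught the "rival store" example above, where the model picked a real but wrong response, and exact matching assumes the model copies responses verbatim, which a temperature of 0 makes likely but does not guarantee.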

Ultimately, we want our product to be used by many companies, each with its own questions. To address this, we'll make a really long prompt that looks like this:

The following are conversations with a chat AI. ...

| Question | Response |

...questions and responses...

...Human/AI examples...

#######

The following are conversations with a chat AI. ...

| Question | Response |

...questions and responses...

...Human/AI examples...

#######

...and so on...

The idea is that by the end of our long prompt, the model should understand the structure of what it's being asked to do, so when we give it a new set of questions and responses, it shouldn't need as much data. Note that to avoid sending this long prompt with every single request, you can fine-tune a model instead; see OpenAI's documentation on fine-tuning.
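To make the structure of that long prompt concrete, here is a sketch that stitches several companies' sections together with the "#######" separator. The function names, the tuple-based data layout, and the second company's data are hypothetical, chosen only for illustration:

```python
INTRO = (
    "The following are conversations with a chat AI. The chat AI is programmed "
    "to give fixed responses to common questions. If the chat AI is ever "
    "presented with a question not in its list of known questions, it responds "
    'with "A human will be in touch shortly".'
)
# A longer line than the "###" breaks used between individual examples
ORG_SEPARATOR = "#######"


def build_org_section(q_and_as, examples):
    """Render one company's block: intro, Q&A table, then Human/AI examples.

    q_and_as: list of (question, response) tuples for the table.
    examples: list of (human_text, ai_text) tuples shown as dialogue.
    """
    table = "\n".join(f"| {q} | {a} |" for q, a in q_and_as)
    dialogue = "\n###\n".join(f"Human: {h}\nAI: {a}" for h, a in examples)
    return f"{INTRO}\n| Question | Response |\n{table}\n###\n{dialogue}"


def build_multi_org_prompt(sections):
    """Join per-company sections so the model sees the pattern repeated."""
    return f"\n{ORG_SEPARATOR}\n".join(sections)


store = build_org_section(
    [("What are your hours?", "Our hours are Monday-Friday, 9am-5pm.")],
    [("Are you open monday?", "Our hours are Monday-Friday, 9am-5pm.")],
)
# Hypothetical second company
cafe = build_org_section(
    [("Do you have wifi?", "Yes, ask the cashier for the password.")],
    [("is there internet", "Yes, ask the cashier for the password.")],
)
prompt = build_multi_org_prompt([store, cafe])
print(prompt.count(ORG_SEPARATOR))  # → 1
```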

Now that we know the inputs to GPT-3, we can turn it into an API with Flask.

To get started, we'll create a Python virtual environment and install our dependencies. We're using Flask as a web server, Flask-SQLAlchemy to talk to our database, the openai library to interact with GPT-3, and propelauth-flask for authentication.

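$ mkdir backend
$ cd backend
$ python3 -m venv venv
$ source venv/bin/activate
(venv) $ pip install -U flask "openai<1.0" Flask-SQLAlchemy propelauth-flask

The code below uses the pre-1.0 interface of the openai library (openai.Completion), which was removed in version 1.0, hence the version pin.

One of the reasons I like Flask is how easy it is to build out an API. We can make a simple wrapper around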

openai's completion API like so:

from flask import Flask, request
import os
import openai

app = Flask(__name__)
openai.api_key = os.getenv("OPENAI_API_KEY")

@app.route("/test_prompt", methods=["POST"])
def test_prompt():
    prompt = request.get_json().get("prompt")
    return complete_prompt(prompt)

def complete_prompt(prompt):
    response = openai.Completion.create(
        engine="davinci-instruct-beta-v3",
        prompt=prompt,
        temperature=0,  # as in the playground, we don't want randomness
        max_tokens=60,
        top_p=1.0,
        frequency_penalty=0.0,
        presence_penalty=0.0,
        stop=["\n"]  # stop after a single line of response
    )
    # Get and return just the text response
    return response["choices"][0]["text"]

Let's test our API by running the Flask server with

flask run and then hitting it with curl:

$ curl -X POST \
    -d '{"prompt": "Original: She no went to the market.\nStandard American English:"}' \
    -H "Content-Type: application/json" \
    localhost:5000/test_prompt
She didn't go to the market.

We need a durable place to store questions and answers ahead of time. Since the questions and answers will be tied to a company and not to an individual, we'll need an identifier for companies. We'll use

org_id (short for organization identifier), and then all we need to do is store questions and answers for each org_id.

You can use pretty much any database for this task (Postgres, MySQL, Mongo, Dynamo, SQLite, etc.). We'll use SQLite locally since there's nothing to deploy or manage, and we'll use SQLAlchemy as an ORM, which will let the same code work with other databases later.
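The data model is small enough to sketch with Python's built-in sqlite3 module before bringing in SQLAlchemy. This stand-alone version (the function names are our own; the app itself uses a SQLAlchemy model instead) stores one JSON string per org_id:

```python
import json
import sqlite3


def open_db(path=":memory:"):
    """Open a database and make sure our single table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS questions_and_answers ("
        " org_id TEXT PRIMARY KEY NOT NULL,"
        " questions_and_answers TEXT NOT NULL)"
    )
    return conn


def save_q_and_as(conn, org_id, q_and_as):
    # INSERT OR REPLACE overwrites any existing row with the same org_id
    conn.execute(
        "INSERT OR REPLACE INTO questions_and_answers VALUES (?, ?)",
        (org_id, json.dumps({"questions_and_answers": q_and_as})),
    )
    conn.commit()


def load_q_and_as(conn, org_id):
    row = conn.execute(
        "SELECT questions_and_answers FROM questions_and_answers WHERE org_id = ?",
        (org_id,),
    ).fetchone()
    # None means this org hasn't saved anything yet
    return None if row is None else json.loads(row[0])["questions_and_answers"]


conn = open_db()
save_q_and_as(conn, "5", [{"question": "What are your hours?",
                           "answer": "Our hours are Monday-Friday, 9am-5pm."}])
print(load_q_and_as(conn, "5")[0]["answer"])  # → Our hours are Monday-Friday, 9am-5pm.
print(load_q_and_as(conn, "3"))  # → None
```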

We've already installed the libraries, so let's define our table in a new file, models.py:

from flask_sqlalchemy import SQLAlchemy

db = SQLAlchemy()

class QuestionsAndAnswers(db.Model):
    # We'll see later on that these IDs are issued by PropelAuth
    org_id = db.Column(db.String(), primary_key=True, nullable=False)
    # The simplest way to store the data is as JSON;
    # SQLite doesn't natively have a JSON column, so we'll use a string
    questions_and_answers = db.Column(db.String(), nullable=False)

and hook it up to our application in app.py:

from models import db

app = Flask(__name__)
app.config['SQLALCHEMY_DATABASE_URI'] = 'sqlite:///local.db'
app.config['SQLALCHEMY_TRACK_MODIFICATIONS'] = False
db.init_app(app)

Finally, let's use the command line to create the table:

(venv) $ python
# ...
>>> from app import app, db
>>> with app.app_context():
...     db.create_all()
...
>>>

Our database is fully set up. Next we can create some routes for reading and writing questions and answers.

We need two routes: one for creating questions and answers and one for reading them. These routes are just simple functions that take the input and save to or fetch from our database. We'll take in the org_id as a path parameter and won't worry about verifying it just yet; we'll revisit it at the end when we add authentication. For now, anyone can send a request on behalf of any organization.

A small warning: you will want to decide what validation to do. We are passing these strings directly into our GPT-3 prompt, so you may want to filter out things like newline characters, which can mess up our prompt.

import json

from models import QuestionsAndAnswers

@app.route("/org/<org_id>/questions_and_answers", methods=["POST"])
def update_questions_and_answers(org_id):
    # TODO: validate this input
    questions_and_answers = request.get_json()["questions_and_answers"]
    # Store the whole {"questions_and_answers": [...]} object so that reads
    # (and test_prompt below) get back the same shape
    serialized = json.dumps({"questions_and_answers": questions_and_answers})
    existing_record = QuestionsAndAnswers.query.get(org_id)
    if existing_record:
        existing_record.questions_and_answers = serialized
    else:
        db.session.add(QuestionsAndAnswers(org_id=org_id, questions_and_answers=serialized))
    db.session.commit()
    return "Ok"

@app.route("/org/<org_id>/questions_and_answers", methods=["GET"])
def fetch_questions_and_answers(org_id):
    questions_and_answers = QuestionsAndAnswers.query.get_or_404(org_id)
    return json.loads(questions_and_answers.questions_and_answers)

And let's quickly test our new endpoints:

$ curl -X POST \
    -d '{"questions_and_answers": [{"question": "What is your phone number for support?", "answer": "Our support number is 555-5555, open any time between 9am-5pm"}]}' \
    -H "Content-Type: application/json" \
    localhost:5000/org/5/questions_and_answers
Ok
$ curl localhost:5000/org/5/questions_and_answers
{"questions_and_answers":[{"answer":"Our support number is 555-5555, open any time between 9am-5pm","question":"What is your phone number for support?"}]}
$ curl -v localhost:5000/org/3/questions_and_answers
# ...
< HTTP/1.0 404 NOT FOUND
# ...

Great! We can set and fetch data for any organization.
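The update route still has a TODO for validating its input, and the earlier warning applies: these strings go straight into the prompt. One possible policy, sketched here as a hypothetical validate_questions_and_answers helper, is to require a non-empty list of question/answer objects, collapse newlines and extra whitespace, and drop "|" characters that would break the table. The limits (MAX_ITEMS, MAX_LENGTH) are assumptions to tune for your own use:

```python
MAX_ITEMS = 50  # assumed cap so the prompt stays a manageable size
MAX_LENGTH = 300  # assumed per-field character limit


def clean_field(value):
    """Collapse newlines/extra whitespace and remove table-breaking pipes."""
    if not isinstance(value, str):
        raise ValueError("questions and answers must be strings")
    cleaned = " ".join(value.replace("|", " ").split())
    if not cleaned:
        raise ValueError("questions and answers must not be empty")
    if len(cleaned) > MAX_LENGTH:
        raise ValueError(f"fields must be at most {MAX_LENGTH} characters")
    return cleaned


def validate_questions_and_answers(payload):
    """Return a cleaned list of {"question", "answer"} dicts or raise ValueError."""
    if not isinstance(payload, list) or not payload:
        raise ValueError("questions_and_answers must be a non-empty list")
    if len(payload) > MAX_ITEMS:
        raise ValueError(f"at most {MAX_ITEMS} questions are allowed")
    cleaned = []
    for item in payload:
        if not isinstance(item, dict):
            raise ValueError("each entry must be an object")
        cleaned.append({
            "question": clean_field(item.get("question")),
            "answer": clean_field(item.get("answer")),
        })
    return cleaned


print(validate_questions_and_answers(
    [{"question": "What are\nyour hours?", "answer": "9am | 5pm"}]
))
# → [{'question': 'What are your hours?', 'answer': '9am 5pm'}]
```

In update_questions_and_answers, you could call this on the request body and return str(err), 400 when it raises ValueError.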

We can go back and update our test_prompt function to:

@app.route("/org/<org_id>/test_prompt", methods=["POST"])
def test_prompt(org_id):
    # TODO: validate this prompt
    user_specified_prompt = request.get_json().get("prompt")
    db_row = QuestionsAndAnswers.query.get_or_404(org_id)
    q_and_a = json.loads(db_row.questions_and_answers)["questions_and_answers"]
    full_prompt = generate_full_prompt(q_and_a, user_specified_prompt)
    return complete_prompt(full_prompt)

And finally, our

generate_full_prompt function:

def generate_full_prompt(q_and_as, user_specified_prompt):
    # Generate the table using our data
    q_and_a_table = "\n".join(map(
        lambda q_and_a: f"| {q_and_a['question']} | {q_and_a['answer']} |",
        q_and_as
    ))

    # Generate positive examples using the exact questions + answers,
    # with a ### line between each example
    q_and_a_examples = "\n###\n".join(map(
        lambda q_and_a: f"Human: {q_and_a['question']}\nAI: {q_and_a['answer']}",
        q_and_as
    ))

    # Generate some negative examples too
    random_negative_examples = """###
Human: help me
AI: A human will be in touch shortly
###
Human: I am so angry right now
AI: A human will be in touch shortly"""

    # Note: if you aren't using a fine-tuned model, your whole prompt needs to be here
    return f"""The following are conversations with a chat AI. The chat AI is programmed to give fixed responses to common questions. If the chat AI is ever presented with a question not in its list of known questions, it responds with "A human will be in touch shortly".
| Question | Response |
{q_and_a_table}
###
{q_and_a_examples}
{random_negative_examples}
###
Human: {user_specified_prompt}
AI: """

You can see that in addition to using the same formatting as before, we also added some easy examples, both positive and negative, for the bot to learn from.
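Every saved question appears twice in this prompt (once in the table and once as an example), so a long list can eventually push the prompt past the model's context window. A rough guard is sketched below. It assumes about 4 characters per token, a common rule of thumb for English text rather than a real tokenizer, and a hypothetical max_prompt_tokens budget that you would derive from your model's documented context length minus the 60 max_tokens reserved for the reply:

```python
CHARS_PER_TOKEN = 4  # rough rule of thumb for English text, not a real tokenizer


def estimate_tokens(text):
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def trim_to_budget(q_and_as, build_prompt, max_prompt_tokens):
    """Drop Q&A entries from the end until the built prompt fits the budget.

    build_prompt takes a list of Q&As and returns the prompt text, e.g.
    lambda items: generate_full_prompt(items, user_specified_prompt).
    """
    kept = list(q_and_as)
    while kept and estimate_tokens(build_prompt(kept)) > max_prompt_tokens:
        kept.pop()
    return kept


def toy_builder(items):
    # Stand-in for generate_full_prompt: just the table rows
    return "\n".join(f"| {q['question']} | {q['answer']} |" for q in items)


items = [{"question": f"Question {i}?", "answer": "Answer."} for i in range(10)]
print(len(trim_to_budget(items, toy_builder, max_prompt_tokens=20)))  # → 3
```

Silently dropping questions is only one option; rejecting oversized lists when they are saved may be friendlier to the team configuring the bot.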

Does it work?

$ curl -X POST \
    -d '{"prompt": "what movies are playing?"}' \
    -H "Content-Type: application/json" \
    localhost:5000/org/5/test_prompt
A human will be in touch shortly
$ curl -X POST \
    -d '{"prompt": "can I get support via phone"}' \
    -H "Content-Type: application/json" \
    localhost:5000/org/5/test_prompt
Our support number is 555-5555, open any time between 9am-5pm

Awesome, our bot learned our custom questions and responded accordingly!
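As an organization's list of questions grows, it helps to re-run a fixed set of questions after every change. Here is a small regression harness that takes any ask function and reports mismatches. The fake_ask below is a hypothetical stand-in; in practice it would POST to /org/<org_id>/test_prompt:

```python
FALLBACK = "A human will be in touch shortly"
SUPPORT_ANSWER = "Our support number is 555-5555, open any time between 9am-5pm"


def run_regression(ask, cases):
    """Return (question, expected, actual) for every case that failed.

    ask: callable taking a question string and returning the bot's reply.
    cases: list of (question, expected_reply) pairs.
    """
    failures = []
    for question, expected in cases:
        actual = ask(question).strip()
        if actual != expected:
            failures.append((question, expected, actual))
    return failures


def fake_ask(question):
    # Hypothetical stand-in for a call to the test_prompt endpoint
    canned = {"can I get support via phone": SUPPORT_ANSWER}
    return canned.get(question, FALLBACK)


cases = [
    ("what movies are playing?", FALLBACK),
    ("can I get support via phone", SUPPORT_ANSWER),
]
print(run_regression(fake_ask, cases))  # → []
```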

Our backend is still missing authentication and everyone can set questions and answers for any

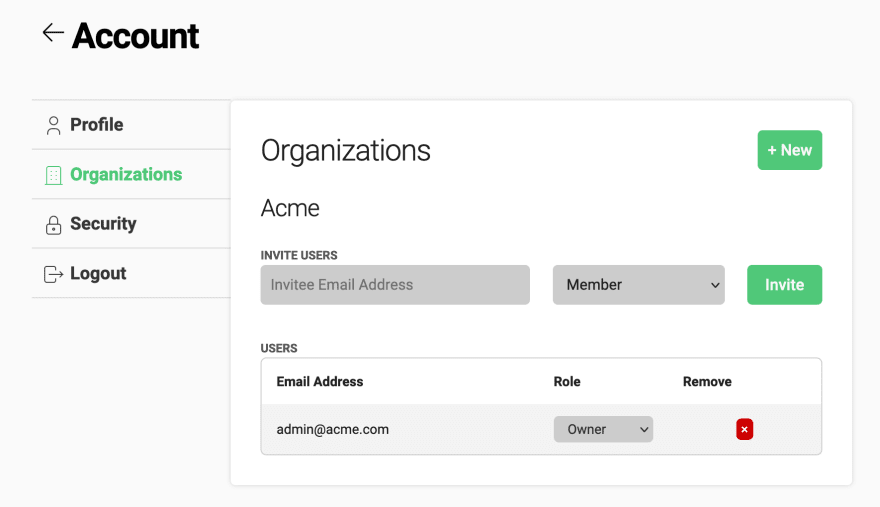

org_id. Next we'll add PropelAuth to address that.Follow the docs to set up and customize our project. The default login page looks like this:

and we can customize it (and every other hosted UI - like our organization management pages) via a simple UI.

Under User Schema, make sure to select B2B Support. This enables your users to create and manage their own organizations.

Following the documentation for Flask, we need to initialize the library like so:

from propelauth_flask import init_auth

# Replace with your project's Auth URL and API key
auth = init_auth("https://REPLACE_ME.propelauthtest.com", "YOUR_API_KEY")

and then we can protect each of our endpoints with the @auth.require_org_member decorator:

@app.route("/org/<org_id>/questions_and_answers", methods=["GET"])
@auth.require_org_member()
def fetch_questions_and_answers(org_id):
    questions_and_answers = QuestionsAndAnswers.query.get_or_404(org_id)
    return json.loads(questions_and_answers.questions_and_answers)

require_org_member() checks the access token that our frontend will send, makes sure the org_id is valid, and makes sure the user is a member of that org_id. This is actually all we need to do on the backend. We add the decorator to each of our routes, and future requests will be rejected if they are for invalid org_ids, from users who aren't in that org, or from non-users.

We can test this again with curl, which will fail since we haven't specified any authentication information.

$ curl -v localhost:5000/org/5/questions_and_answers
# ...
< HTTP/1.0 401 UNAUTHORIZED
# ...

Our UI needs a few components. First, we need some way for a user to add questions and responses. We'll also want a way for our users to test the bot by submitting questions. Normally, we'd need login pages, account management pages, organization management pages, etc., but all of those are already taken care of by PropelAuth.

We'll use Next.js, as it provides some nice features on top of React. We'll also use SWR to query our backend and @propelauth/react for authentication.

$ npx create-next-app@latest frontend
$ cd frontend
$ yarn add swr @propelauth/react

I'll leave the details of how the UI should look up to you, and we'll just look at a few important parts of the frontend.

Our users log in and create or join organizations on our hosted PropelAuth pages.

We need some way to interact with this data on our frontend as well. For that, we'll use the @propelauth/react library. We'll initialize the library in pages/_app.js by wrapping our application with an AuthProvider:

import {AuthProvider} from "@propelauth/react";

function MyApp({Component, pageProps}) {
    return <AuthProvider authUrl="REPLACE_ME">
        <Component {...pageProps} />
    </AuthProvider>
}

export default MyApp

The AuthProvider allows any child component to fetch user information using withAuthInfo, including which organizations the user is a member of. We can make a drop-down menu which allows users to pick an organization:

import {withAuthInfo} from "@propelauth/react";

// Allow users to select an organization
function OrgSelector(props) {
    // isLoggedIn and orgHelper are injected automatically by withAuthInfo below
    if (!props.isLoggedIn) return <span/>

    const orgs = props.orgHelper.getOrgs();
    // getSelectedOrg() will infer an intelligent default
    // in case they haven't selected one yet
    const selectedOrg = props.orgHelper.getSelectedOrg();
    const handleChange = (event) => props.orgHelper.selectOrg(event.target.value);

    return <select value={selectedOrg.orgId} onChange={handleChange}>
        {orgs.map(org => <option key={org.orgId} value={org.orgId}>{org.orgName}</option>)}
    </select>
}

export default withAuthInfo(OrgSelector);

Here we call selectOrg to save the user's choice, which we can later read back with getSelectedOrg. We'll need this when fetching from our backend.

When we set up our backend, we said our frontend will pass in an access token. That access token is one of the values injected by

withAuthInfo. Here's a function that uses fetch to pass along an access token:

async function testPrompt(orgId, accessToken, prompt) {
    const response = await fetch(`http://localhost:5000/org/${orgId}/test_prompt`, {
        method: "POST",
        headers: {
            "Content-Type": "application/json",
            // accessTokens are passed in the header
            "Authorization": `Bearer ${accessToken}`
        },
        body: JSON.stringify({prompt: prompt})
    })
    // Our backend returns the completion as plain text, not JSON
    return await response.text()
}

And here's an example component that makes an authenticated request to

test_prompt:

import {useState} from "react";
import {withAuthInfo, RedirectToLogin} from "@propelauth/react";

function TestPrompt(props) {
    const [prompt, setPrompt] = useState("");
    const [response, setResponse] = useState("");

    if (!props.isLoggedIn) return <RedirectToLogin/>

    const submit = async (e) => {
        e.preventDefault();
        const orgId = props.orgHelper.getSelectedOrg().orgId;
        const apiResponse = await testPrompt(orgId, props.accessToken, prompt);
        setResponse(apiResponse)
    }

    return <form onSubmit={submit}>
        <input type="text" value={prompt} onChange={e => setPrompt(e.target.value)} />
        <button>Submit</button>
        <pre>Server Response: {response}</pre>
    </form>
}

export default withAuthInfo(TestPrompt)

And that's really all there is to it. Here's my not-very-pretty version:

With little data and not much code, we were able to make a powerful chat bot with B2B support. As we get more data, we can keep improving our bot.
