
Get started with Apache Airflow

Welcome back to another post!!

This article is about Apache Airflow. Before authoring our first workflow, let's look at what Apache Airflow actually is.

Airflow is a platform to programmatically author, schedule and monitor workflows.

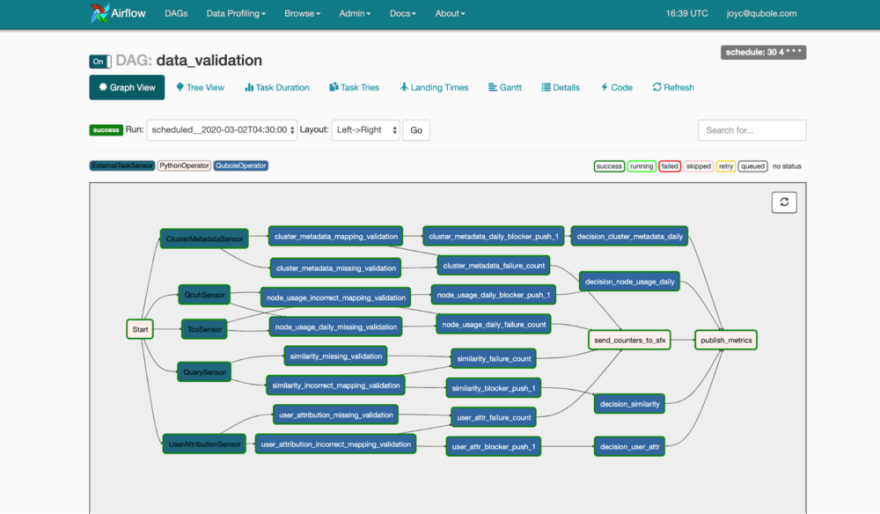

Airflow uses directed acyclic graphs (DAGs) to manage workflow orchestration. Tasks and dependencies are defined in Python and then Airflow manages the scheduling and execution.

Basically, it's a tool that we can use to orchestrate workflows using code!! Pretty neat, right?

In Airflow, a DAG – or a Directed Acyclic Graph – is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies.

It's just a structure that we define using Python scripts. To put it simply, it defines how we want to run our tasks.

In Airflow, every workflow is a DAG. A DAG is made up of tasks, and each task is an instance of an operator. There are different types of operators available, such as BashOperator, PythonOperator, EmailOperator, etc.

When we run a DAG, all the tasks inside it run the way we have orchestrated them. Tasks can run in parallel, run sequentially, or wait on another task or an external resource.
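To make the idea of dependency-driven ordering concrete, here is a minimal sketch in plain Python. It does not use Airflow and is not how Airflow's scheduler is implemented; it only relies on the standard library's graphlib module (Python 3.9+). The task names are hypothetical. Tasks that land in the same wave have no dependency on each other, so they could run in parallel.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "validate": {"extract"},
    "load": {"clean", "validate"},
}


def execution_waves(deps):
    """Group tasks into waves; tasks in one wave do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are all done
        waves.append(ready)
        sorter.done(*ready)  # mark them finished so dependents become ready
    return waves


waves = execution_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(number, wave)
```

Here clean and validate both wait only on extract, so they share a wave, while load has to wait for both of them. The "acyclic" part matters: if two tasks depended on each other, no valid order would exist.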

Assuming that Airflow is up and running, let's jump into creating our first DAG. If Airflow is not yet installed on your system, please check the documentation for more info. If you have an AWS account, you can use MWAA (Amazon Managed Workflows for Apache Airflow). It's the same Airflow; the only difference is that AWS manages it as a service.

Below is the code for a simple DAG:

    from datetime import datetime

    from airflow import DAG
    from airflow.operators.python_operator import PythonOperator  # Airflow 2.x: airflow.operators.python

    # Defaults applied to every task in the DAG
    args = {
        'owner': 'Airflow',
        'start_date': datetime(2021, 1, 1),
        'retries': 1,
    }


    def print_hello():
        return 'Hello world from first Airflow DAG!'


    # Run once a day; catchup=False skips runs for past dates
    dag = DAG(
        dag_id='FIRST_DAG',
        default_args=args,
        schedule_interval='@daily',
        catchup=False
    )

    # The task: call print_hello through a PythonOperator
    hello_task = PythonOperator(task_id='hello_task', python_callable=print_hello, dag=dag)

The code above invokes the Python callable print_hello through a PythonOperator. We assign that operator to a variable called hello_task, and this variable is essentially our task. We save the file as hello_world.py in the dags folder under our AIRFLOW_HOME directory.

Below is a sample folder structure:
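Since print_hello is an ordinary Python function, it can be checked on its own before the DAG file is ever handed to Airflow. The snippet below is only a sketch of that idea; it repeats the function from the DAG so it can run without Airflow installed.

```python
def print_hello():
    # Same callable as in the DAG; PythonOperator calls it when the task runs.
    return 'Hello world from first Airflow DAG!'


# Call the function directly, exactly as plain Python would.
result = print_hello()
assert isinstance(result, str)
print(result)
```

Keeping the callable free of Airflow-specific code makes this kind of quick check possible.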

    AIRFLOW_HOME/
    ├── common
    │   └── common_functions.py
    ├── dags
    │   └── hello_world.py

You can modularize the functions you want by storing them as Python scripts under the common folder and importing them into the DAG.
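As a rough sketch of that layout, common_functions.py could hold a small helper that the DAG's callable reuses. The helper name build_greeting is hypothetical, and the import shown in the comment assumes the directory containing common is importable (for example through PYTHONPATH), which depends on how your Airflow environment is set up.

```python
# common/common_functions.py (hypothetical contents)
def build_greeting(name: str) -> str:
    """Return the greeting text that a task can hand back."""
    return f"Hello world from {name}!"


# dags/hello_world.py would then import the helper, for example:
#   from common.common_functions import build_greeting
# (assumption: the parent directory of `common` is on the Python path)

def print_hello():
    # The DAG's callable now delegates to the shared helper.
    return build_greeting("first Airflow DAG")


print(print_hello())
```

Both pieces are shown in one block here only so the example runs as a single file; in the folder structure above they would live in separate files.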

To run the DAG, open the Airflow web UI, turn the DAG on with its toggle button, and click the run button beside it.

Once the run succeeds, the task's border turns dark green.

That's it!! We just created our first DAG. Airflow is a neat and simple tool that helps us orchestrate tasks and workflows like this. It doesn't seem like a big deal with just one task, but in the real world we have to orchestrate a large number of workflows. As the scale increases, Airflow becomes a real lifesaver.

This was just a small intro to Airflow for those who have never heard of it. For experienced folks, this article won't offer much. It's just a byte-sized article ✌️

Hope you enjoyed the article. Do not hesitate to share your feedback. I am on Twitter @arunkc97. Give a follow!
