34

Text preprocessing and email classification using basic Python only

Classifying emails as spam and non spam? Isn't that the "hello world" of Natural Language Processing? Hasn't every other developer worked on it?

Well, yes. But what about writing the codes from scratch without using inbuilt libraries? This blog is for those who have used the inbuilt python libraries but aren't quite sure about what goes on behind them. Find the full code here. After reading this blog, you will gain a better understanding of the entire pipeline. So let's jump right in!

The basic steps in this problem are -

First, read the emails and store them in a list. This has been shown below using the csv reader.

emails = []

with open('emaildataset.csv', 'r') as file:

reader = csv.reader(file)

for row in reader:

emails.append((row[0].strip(), row[1].strip()))We can now move onto the preprocessing stage. The emails are first converted to lowercase and then split into tokens. Then, we apply 3 basic preprocessing processes on the tokens: punctuation removal, stopword removal and stemming. Let us go over these in detail.

Punctuation Removal

This process involves removing all punctuations in a string, which we do using python's string function, replace(). The function below takes a string as input, replaces them with an empty string, and returns a string without punctuations. More punctuations can be added to the list, or a regex of punctuations can be used.

This process involves removing all punctuations in a string, which we do using python's string function, replace(). The function below takes a string as input, replaces them with an empty string, and returns a string without punctuations. More punctuations can be added to the list, or a regex of punctuations can be used.

def punctuation_removal(data_string):

punctuations = [",", ".", "?", "!", "'", "+", "(", ")"]

for punc in punctuations:

data_string = data_string.replace(punc, "")

return data_stringStopword Removal

This process involves removing all the commonly used words that are used to make a sentence grammatically correct, without adding much meaning. The function given below takes a list of tokens as input, parses through it, checks if any of them are in a specified list of stopwords and returns a list of tokens without stopwords. More stopwords can be added to the list.

This process involves removing all the commonly used words that are used to make a sentence grammatically correct, without adding much meaning. The function given below takes a list of tokens as input, parses through it, checks if any of them are in a specified list of stopwords and returns a list of tokens without stopwords. More stopwords can be added to the list.

def stopword_removal(tokens):

stopwords = ['of', 'on', 'i', 'am', 'this', 'is', 'a', 'was']

filtered_tokens = []

for token in tokens:

if token not in stopwords:

filtered_tokens.append(token)

return filtered_tokensStemming

This process is the last step in the preprocessing pipeline. Here, we convert our tokens into their base form. Words like "eating", "ate" and "eaten" get converted to eat. For this, we use the help of python dictionaries, with the key and value pairs defined as the base form token and a list of the word in other forms. Eg, {"eat": ["ate", "eaten", "eating"]}. This helps in normalizing the words in our data/corpus.

This process is the last step in the preprocessing pipeline. Here, we convert our tokens into their base form. Words like "eating", "ate" and "eaten" get converted to eat. For this, we use the help of python dictionaries, with the key and value pairs defined as the base form token and a list of the word in other forms. Eg, {"eat": ["ate", "eaten", "eating"]}. This helps in normalizing the words in our data/corpus.

We parse through each token and check if it is present in the list of words not in their base form. If it is, then the base form of that word is used. This is demonstrated in the function below.

def stemming(filtered_tokens):

root_to_token = {'you have':['youve'],

'select':['selected', 'selection'],

'it is':['its'],

'move':['moving'],

'photo':['photos'],

'success':['successfully', 'successful']

}

base_form_tokens = []

for token in filtered_tokens:

for base_form, token_list in root_to_token.items():

if token in token_list:

base_form_tokens.append(base_form)

else:

base_form_tokens.append(token)

return base_form_tokensNow, using the functions defined above, we form a main preprocessing pipeline, as shown below:

tokens = []

for email in emails:

email = email[0].lower().split()

for word in email:

clean_word = punctuation_removal(word)

tokens.append(clean_word)

tokens = set(tokens)

filtered_tokens = stopword_removal(tokens)

base_form_tokens = stemming(filtered_tokens)After the emails are converted to a list of tokens in their base form, without punctuations and stopwords, we apply the set() function to get the unique words only.

unique_words = []

unique_words = set(base_form_tokens)We define each feature vector to be of the same length as the list of unique words. For each unique word, if it is present in the particular email, a 1 is added to the vector, else a 0 is added. Eg, for the email "Hey, it's betty!" with the list of unique words being ["hello", "hey", "sandwich", "i", "it's", "show"], the feature vector is [0, 1, 0, 0, 1, 0]. Note that "betty" is not present in the list of unique words, thus it is ignored in the final result.

This is demonstrated in this code snippet below where the feature vector is a python dictionary with keys being the unique words and values being 0 or 1 depending on whether the word is present in the email. The label for each email is also stored.

feature_vec = {}

for word in unique_words:

feature_vec[word] = word in base_form_tokens

pair = (feature_vec, email[1]) #email[1] is the label for each email

train_data.append(pair)This way, we generate our training data. The complete pipeline till this stage is given the code snippet below.

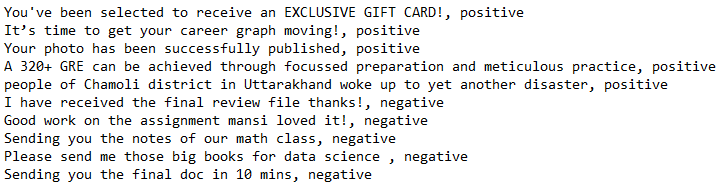

train_data = []

for email in emails:

tokens = []

word_list = email[0].lower().split()

for word in word_list:

clean_word = punctuation_removal(word)

tokens.append(clean_word)

filtered_tokens = stopword_removal(tokens)

base_form_tokens = stemming(filtered_tokens)

feature_vec = {}

for word in unique_words:

feature_vec[word] = word in base_form_tokens

pair = (feature_vec, email[1])

train_data.append(pair)The Naive Bayes Classifier is imported from the nltk module. We can now find feature vectors for any email (say, "test_features") and classify if it is spam or not.

from nltk import NaiveBayesClassifier

classifier = NaiveBayesClassifier.train(train_data)

output = classifier.classify(test_features)The complete pipeline for testing is given below -

def testing(email_str):

tokens = []

word_list = email_str.lower().split()

for word in word_list:

clean_word = punctuation_removal(word)

tokens.append(clean_word)

filtered_tokens = stopword_removal(tokens)

base_form_tokens = stemming(filtered_tokens)

test_features = {}

for word in unique_words:

test_features[word] = word in base_form_tokens

output = classifier.classify(test_features)

return outputWith this, you now know the ins and outs of any basic natural language processing pipeline. Hope this helped!

34