45

Different Ways to Load Data From CSV Files in PostgreSQL

First of all make sure that your user has SUPERUSER privileges.

You can set this using.

You can set this using.

ALTER ROLE <user> WITH SUPERUSER;import psycopg2

import csv

connection = psycopg2.connect(

database="store",

user="postgres",

password="lgwmfpsc",

host="localhost",

port="5432", # default post

)

cursor = connection.cursor()

cursor.execute("DROP TABLE IF EXISTS superstore;")

connection.commit()

create_query = '''CREATE TABLE superstore (

id INT PRIMARY KEY,

ship_model VARCHAR(255) NOT NULL,

segment VARCHAR(255) NOT NULL,

country VARCHAR(255) NOT NULL,

city VARCHAR(255) NOT NULL,

state VARCHAR(255) NOT NULL,

postal_code INT NOT NULL,

region VARCHAR(255) NOT NULL,

category VARCHAR(255) NOT NULL,

subcategory VARCHAR(255) NOT NULL,

sales FLOAT NOT NULL,

quantity INT NOT NULL,

discount FLOAT NOT NULL,

profit FLOAT NOT NULL

)

'''

cursor.execute(create_query)

connection.commit()

with open('./SampleSuperstore.csv') as file:

id = 1

csv_data = csv.reader(file)

next(csv_data)

for row in csv_data:

row = [id] + row

row_tuple = tuple(row)

cursor.execute('''INSERT INTO superstore (id, ship_model, segment, country, city, state, postal_code,

region, category, subcategory, sales, quantity, discount, profit)

VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)''', row_tuple)

id += 1

connection.commit()

cursor.execute("SELECT * FROM superstore LIMIT 10")

print(cursor.fetchall())

connection.close()Before iterating throught the list next is used to skip the first line of the file as they are headers.

import psycopg2

import csv

connection = psycopg2.connect(

user = 'postgres',

password = 'lgwmfpsc',

host = 'localhost',

database = 'store',

port="5432"

)

cursor = connection.cursor()

create_query = '''CREATE TABLE superstore (

id INT(255) NOT NULL AUTO_INCREMENT,

ship_model VARCHAR(255) NOT NULL,

segment VARCHAR(255) NOT NULL,

country VARCHAR(255) NOT NULL,

city VARCHAR(255) NOT NULL,

state VARCHAR(255) NOT NULL,

postal_code INT NOT NULL,

region VARCHAR(255) NOT NULL,

category VARCHAR(255) NOT NULL,

subcategory VARCHAR(255) NOT NULL,

sales FLOAT NOT NULL,

quantity INT NOT NULL,

discount FLOAT NOT NULL,

profit FLOAT NOT NULL,

PRIMARY KEY(id))

'''

cursor.execute("DROP TABLE IF EXISTS superstore")

cursor.execute(create_query)

q = '''LOAD DATA LOCAL INFILE '/home/sapan/codes/Linkedin-Python/database-python/csv-database/SampleSuperstore.csv'

INTO TABLE superstore

FIELDS TERMINATED BY ',' ENCLOSED BY "" (ship_model, segment, country, city, state, postal_code,

region, category, subcategory, sales, quantity, discount, profit);'''

cursor.execute(q)

connection.commit()

cursor.execute("SELECT * FROM superstore LIMIT 10")

print(cursor.fetchall())

connection.close()

# using pandas with sqlalchemy

import pandas as pd

from sqlalchemy import Column, Integer, String, DateTime, Float, Boolean

from sqlalchemy import create_engine, func

from sqlalchemy.orm import sessionmaker, relationship

from sqlalchemy.ext.declarative import declarative_base

engine = create_engine('postgres://postgres:lgwmfpsc@localhost/store')

Base = declarative_base()

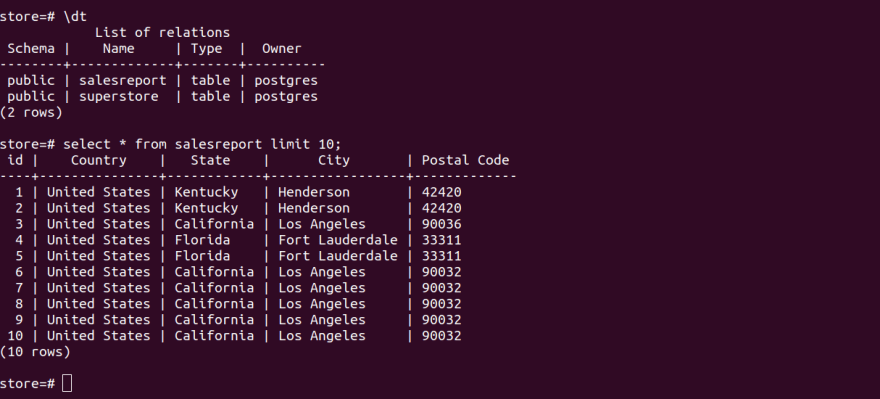

class SalesReport(Base):

__tablename__ = 'salesreport'

id = Column(Integer, primary_key=True)

country = Column("Country", String(length=50))

state = Column("State", String(length=50))

city = Column("City", String(length=50))

postalcode = Column("Postal Code", String(length=50))

def __repr__(self):

return f'''<Salesreport(id='{self.country}', country='{self.country}', state='{self.state}', postal_code='{self.postalcode}')>

'''

Base.metadata.create_all(engine)

file_name = './sample_data.csv'

data_frame = pd.read_csv(file_name)

# # the below function call automatically import the data into our database, so we do not

# # to work with the session here

data_frame.to_sql(

con = engine,

name = SalesReport.__tablename__,

if_exists='append',

index = False

)

# # however if we want to prove the data was imported, we need session here

session = sessionmaker()

session.configure(bind=engine)

s = session()

results = s.query(SalesReport).limit(10).all()

for i, r in enumerate(results):

print(i, r)

print()

overall_max = s.query(func.max(SalesReport.postalcode)).scalar

print(overall_max)

results = s.query(SalesReport).order_by(SalesReport.postalcode.desc()).limit(10)

for row in results:

print(results)

In this post we only see how to populate our data. If you want to view completed details how to use postgreSQL with python refers to this article.

https://sapanravidas.medium.com/postgres-with-python3-ec3cafc5c98d

https://sapanravidas.medium.com/postgres-with-python3-ec3cafc5c98d

45