72

loading...

This website collects cookies to deliver better user experience

Agent 🕵️

Agent can take some action in an environment to have some rewards or penalties.

Reward 🏆

depending upon agent action he will get a reward or a penalty

Environment 🖼️

The place where all happens. Agent does specify work according to the AI by analyzing environment and reward or penalty is given by how good or bad does the agent perform in that environment.

The Algorithm we use is PPO Proximal Policy Optimization

import os

from stable_baselines3 import PPO

from stable_baselines3.common.callbacks import BaseCallbackclass TrainAndLoggingCallback(BaseCallback):

def __init__(self, check_freq, save_path, verbose=1):

super(TrainAndLoggingCallback, self).__init__(verbose)

self.check_freq = check_freq

self.save_path = save_path

def _init_callback(self):

if self.save_path is not None:

os.makedirs(self.save_path, exist_ok=True)

def _on_step(self):

if self.n_calls % self.check_freq == 0:

model_path = os.path.join(self.save_path, 'best_model_{}'.format(self.n_calls))

self.model.save(model_path)

return TrueCHECKPOINT_DIR = './train/'

LOG_DIR = './logs/'callback = TrainAndLoggingCallback(check_freq=10000, save_path=CHECKPOINT_DIR)We just have it as a backup for future reference else we need to re-run the whole training process.

model = PPO('CnnPolicy', env, verbose=1, tensorboard_log=LOG_DIR, learning_rate=0.000001,

n_steps=512)model and set that to PPO which is our model and passing parameters:CnnPolicy- It is like a computer-based brain like a Neural Network in deep learning. A bunch of neurons communicate with each other and learn the relationship between different variables. Then there are various policies for different tasks. We used CnnPolicy because when it comes to image-based problems this model has its upper hand,

env - Our environment which we preprocessed.verbose=1 - This gives us the data when we train the model. like setting it to 0 no output, 1 info, 2 debug.tensorboard_log=LOG_DIR - This helps us to view the metric of how our training is performing as we are running our model.learning_rate=0.000001 - The learning rate, can be a function of the current progress remaining.n_steps=512 - The number of steps to run for each environment per update.The hardest thing in any deep learning or machine learning is Getting the data in the right format.

This one-line code created a temporary AI model.

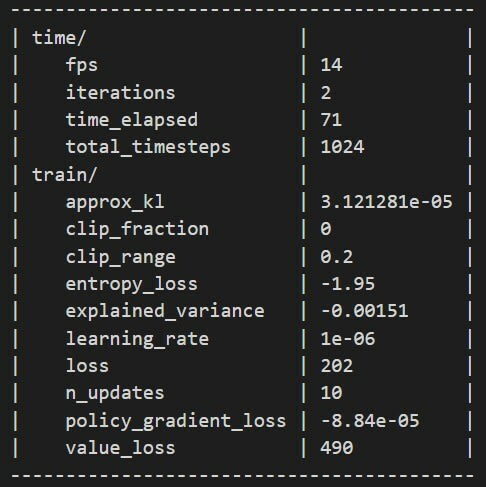

model.learn(total_timesteps=100000, callback=callback)total_timesteps=100000 - The total number of samples (env steps) to train on.callback=callback - called at every step with the state of the algorithm.You will get some details about the current process while running model.learn.

fps : Frame Per Seconditerations : Number of times the process repeated.time_elapsed : How long it been training for.total_timesteps : How many frames our model goes through.entropy_loss (⬇️) : In reinforcement learning, a similar situation can occur if the agent discovers a strategy that results in a reward that is better than it was receiving when it first started, but very far from a strategy that would result in an optimal reward.explained_variance (⬆️) : The explained variance is used to measure the proportion of the variability of the predictions of a machine learning model. Simply put, it is the difference between the expected value and the predicted value.learning_rate (📚): It is a tuning parameter in an optimization algorithm that determines the step size at each iteration while moving toward a minimum of a loss function.loss (⬇️) : loss is the value of the cost function for our training data.value_loss (⬇️) : val_loss is the value of cost function for our cross-validation datamodel = PPO.load('./train/best_model_1000000')best_model_1000000.state = env.reset()

while True:

action, _ = model.predict(state)

state, reward, done, info = env.step(action)

env.render()