
Use a customized and isolated Python environment in Apache Zeppelin notebook

Apache Zeppelin is a web-based notebook that enables data-driven, interactive data analytics and collaborative documents with SQL, Scala, Python and more.

For Python developers, a customized and isolated Python runtime environment is an indispensable requirement. You and your colleagues may want to use different versions of Python and of Python packages without affecting each other’s environments. In this article, I’d like to show you how to use a customized and isolated Python environment in a Hadoop YARN cluster. How to achieve this for PySpark will be covered in another article. (All the features in this article were implemented in the JIRA issue ZEPPELIN-5330.) You can reproduce all the steps here by downloading this note.

First, let’s create a YAML file that defines the Python conda environment:

    name: python_env
    channels:
      - conda-forge
      - defaults
    dependencies:
      - python=3.7
      - pycodestyle
      - numpy
      - pandas
      - scipy
      - grpcio
      - protobuf
      - pandasql
      - ipython
      - ipykernel
      - jupyter_client
      - panel
      - pyyaml
      - seaborn
      - plotnine
      - hvplot
      - intake
      - intake-parquet
      - intake-xarray
      - altair
      - vega_datasets
      - pyarrow

Once the environment has been created from this file (for example with conda env create -f and the name of your YAML file), run the following commands to pack it into a tar archive and upload the archive to HDFS. The conda pack command requires the conda-pack package to be installed.

conda pack -n python_env

hadoop fs -put python_env.tar.gz /tmp

# The python conda tar should be publicly accessible, so need to change permission here.

hadoop fs -chmod 644 /tmp/pyspark_env.tar.gz%python.conf

# set zeppelin.interpreter.launcher to be yarn, so that python interpreter run in yarn container,

# otherwise python interpreter run as local process in the zeppelin server host.

zeppelin.interpreter.launcher yarn

# zeppelin.yarn.dist.archives can be either local file or hdfs file

zeppelin.yarn.dist.archives hdfs:///tmp/python_env.tar.gz#environment

# conda environment name, aka the folder name in the working directory of yarn container

zeppelin.interpreter.conda.env.name environment%python

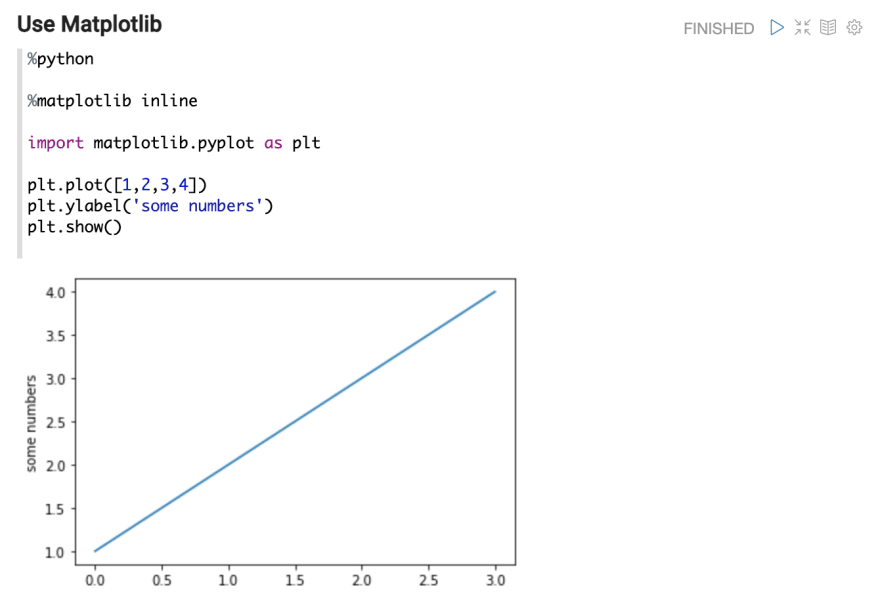

%matplotlib inline

import matplotlib.pyplot as plt

plt.plot([1,2,3,4])

plt.ylabel('some numbers')

plt.show()
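To check that a paragraph is really running inside the packed conda environment rather than the host’s system Python, a small sanity check along the following lines may help. This is only a sketch: the helper name describe_environment and the EXPECTED_PACKAGES list are hypothetical names chosen for illustration, and the list is just a sample of the packages from the YAML file above. If everything is wired up as described, the printed interpreter path would be expected to point inside the unpacked environment folder of the YARN container, but the exact path depends on your cluster.

```python
import importlib
import sys

# Hypothetical sample of packages taken from the conda YAML file above.
EXPECTED_PACKAGES = ["numpy", "pandas", "scipy"]


def describe_environment(packages):
    """Collect the interpreter path, Python version and package versions."""
    report = {
        "executable": sys.executable,
        "python": "{}.{}.{}".format(*sys.version_info[:3]),
        "packages": {},
    }
    for name in packages:
        try:
            module = importlib.import_module(name)
            # Most packages expose __version__; fall back to "unknown" otherwise.
            report["packages"][name] = getattr(module, "__version__", "unknown")
        except ImportError:
            # None marks a package that is missing from this environment.
            report["packages"][name] = None
    return report


report = describe_environment(EXPECTED_PACKAGES)
print("Interpreter:", report["executable"])
print("Python version:", report["python"])
for name, version in report["packages"].items():
    print(f"{name}: {version if version is not None else 'NOT INSTALLED'}")
```

Pasted into a %python paragraph, this prints one line per package, so a missing or unexpected version stands out before you start real work in the note.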

This feature had not yet been released when this article was published, so you can build the Zeppelin master branch yourself and import this note to try it. If you have any questions, you can ask on the Zeppelin user mailing list or Slack channel (http://zeppelin.apache.org/community.html).
