
How to Open A File in Python Like A Pro

Read the original post on my blog here: How to Open A File in Python Like A Pro

It's a fundamental question, and most people learn the answer when they first get started with Python.

And the solution is rather simple.

1st Attempt: open()

```python
f = open('./i-am-a-file', 'rb')  # the file object must be named f, as used below
for line in f.readlines():
    print(line)
f.close()
```

But is that a good solution? What if the file does not exist? Then `open()` raises an exception (`FileNotFoundError`) and the program ends.
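Here's a minimal sketch of that failure mode, using only the standard library. Opening a path that doesn't exist raises `FileNotFoundError`, which is a subclass of `OSError`. Unless something catches it, the script stops there. The helper `open_missing_file` and the temporary path are hypothetical, made up for this illustration.

```python
import os
import tempfile

def open_missing_file(path):
    """Hypothetical helper: return the name of the exception open() raises, or None."""
    try:
        f = open(path, 'rb')
    except OSError as exc:  # FileNotFoundError is a subclass of OSError
        return type(exc).__name__
    f.close()
    return None

# A name inside a brand-new temporary directory cannot exist yet.
missing = os.path.join(tempfile.mkdtemp(), 'i-am-a-file')
print(open_missing_file(missing))  # FileNotFoundError
```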

So it's better to always check that the file exists before reading or writing it.

2nd Attempt: os.path.isfile()

```python
import os

file_path = './i-am-a-very-large-file'

if os.path.isfile(file_path):
    f = open(file_path, 'rb')
    for line in f.readlines():
        print(line)
    f.close()
```

Is this solution good enough? What if we have some more complex logic for every line, and it throws an exception? In that situation, `f.close()` will never be called, so the file may stay open until the interpreter exits. That is bad practice, as it might cause unexpected issues. For example, suppose a long-running Python program reads a temp file without closing it explicitly, and the OS (such as Windows) locks the file while it is open. Then that temp file cannot be deleted until the program ends.

In this case, a better choice is to wrap the file operation in `with`, so that the file is closed automatically whether the operation succeeds or fails.

3rd Attempt: with

```python
import os

file_path = './i-am-a-very-large-file'

if os.path.isfile(file_path):
    with open(file_path, 'rb') as f:  # f is closed automatically on exit
        for line in f.readlines():
            print(line)
```

Is this the perfect solution?

In most cases, yes, it is enough to handle the file operation.
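Here's a small sketch that checks the claim that `with` closes the file whether the operation succeeds or fails. The helper `close_state_after_error` is hypothetical. It simulates the "complex logic" failing on the first line with a `ValueError`. It runs this twice: once with a manual `f.close()` (2nd Attempt style) and once with `with` (3rd Attempt style). Each time, it reports whether the file ended up closed.

```python
import os
import tempfile

def close_state_after_error(use_with):
    """Hypothetical helper: report f.closed after the per-line logic raises."""
    fd, path = tempfile.mkstemp()
    os.write(fd, b'line 1\nline 2\n')
    os.close(fd)
    f = None
    try:
        if use_with:
            with open(path, 'rb') as f:  # 3rd Attempt style
                for _line in f:
                    raise ValueError('complex logic failed')
        else:
            f = open(path, 'rb')  # 2nd Attempt style
            for _line in f:
                raise ValueError('complex logic failed')
            f.close()  # never reached
    except ValueError:
        pass
    closed = f.closed
    f.close()  # clean up the leaked handle (a no-op if already closed)
    os.remove(path)
    return closed

print(close_state_after_error(use_with=False))  # False
print(close_state_after_error(use_with=True))   # True
```

With the manual `f.close()`, the handle is left open after the error. With `with`, it is already closed by the time the exception reaches the caller.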

But what if you need to read a very, very, very large file, such as a 4 GB one? Because `readlines()` returns every line in a single list, the Python program has to read the whole file into memory before it can start your operation. If this is an API on your server and several requests come in to read multiple large files, how much memory do you need: 16 GB, 32 GB, 64 GB, just for a simple file operation?

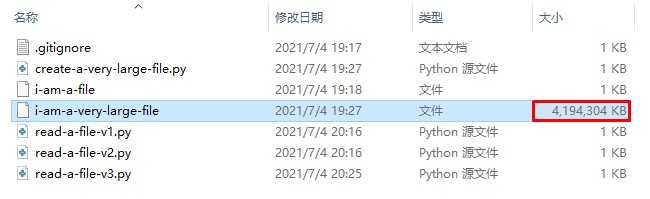

We can run a very simple experiment on Windows. First, let's create a 4 GB file with the following script.

```python
import os

size = 1024 * 1024 * 1024 * 4  # 4 GB

with open('i-am-a-very-large-file', 'wb') as f:
    f.write(os.urandom(size))  # fill the file with random bytes
```
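A side note on the script above: `os.urandom(size)` builds the entire 4 GB payload as one bytes object before writing it. That means the creation step itself temporarily needs 4 GB of RAM. The sketch below writes the same amount of random data in chunks instead. The function name `create_random_file` and the 1 MiB default chunk size are my own choices, not part of the original demo.

```python
import os

def create_random_file(path, size, chunk_size=1024 * 1024):
    """Write `size` random bytes to `path`, holding at most one chunk in memory."""
    with open(path, 'wb') as f:
        remaining = size
        while remaining > 0:
            n = min(chunk_size, remaining)
            f.write(os.urandom(n))  # only this chunk exists in memory
            remaining -= n

# Same 4 GB file as the script above:
# create_random_file('i-am-a-very-large-file', 1024 * 1024 * 1024 * 4)
```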

Now we have a 4 GB file. Let's read it and record the memory statistics using Windows Task Manager.

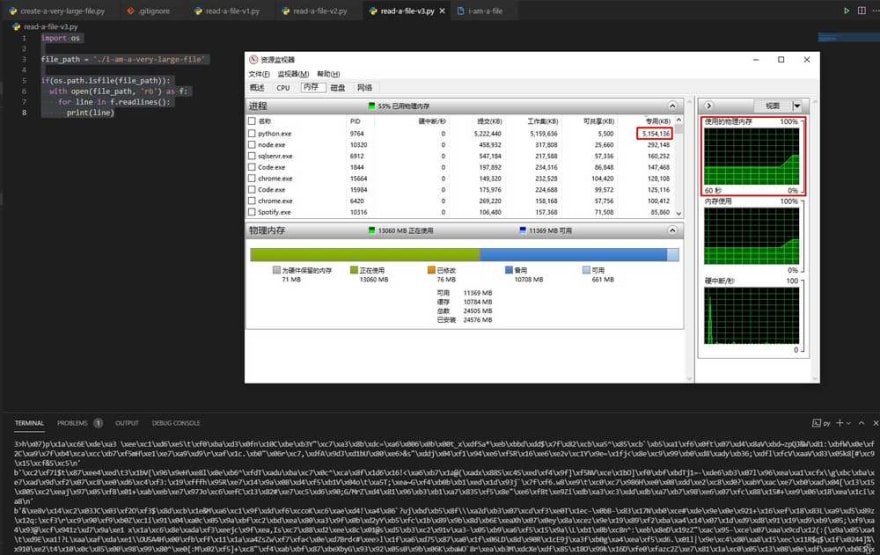

The screenshot shows that the `python` process uses 5,154,136 KB of memory (roughly 5 GB) just to read this one file! You can clearly see the steep rise in the memory graph. (FYI, I have 24 GB of memory in total.)

So to make our solution better, we have to find a way to optimise it. If only we could read each part of the file only when we actually need it!
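If you'd like to reproduce the effect without Task Manager, here's a small cross-platform sketch using the standard `tracemalloc` module. It uses a 10 MB file of 100-byte lines as a stand-in for the 4 GB file, and measures the peak memory Python allocates while `readlines()` runs. The names `peak_bytes_readlines` and `SIZE_LINES` are hypothetical. `tracemalloc` only counts allocations made through Python's allocators, so its numbers won't match Task Manager's. It still shows the same thing, though: the peak is at least the size of the file.

```python
import os
import tempfile
import tracemalloc

SIZE_LINES = 100_000  # 100,000 lines x 100 bytes = 10 MB stand-in for the 4 GB file

def peak_bytes_readlines(path):
    """Hypothetical helper: (line count, peak traced memory) while readlines() runs."""
    tracemalloc.start()
    try:
        with open(path, 'rb') as f:
            lines = f.readlines()  # every line lives in one list at the same time
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return len(lines), peak

fd, path = tempfile.mkstemp()
with os.fdopen(fd, 'wb') as out:
    out.write((b'x' * 99 + b'\n') * SIZE_LINES)

file_size = os.path.getsize(path)
count, peak = peak_bytes_readlines(path)
print(file_size, count, peak)  # the peak is at least as large as the file itself
os.remove(path)
```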

This is where generators come in, and we can write the following solution.

4th Attempt: yield

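```python
import os

def read_file(f_path):
    BLOCK_SIZE = 1024
    if os.path.isfile(f_path):
        with open(f_path, 'rb') as f:  # use the parameter, not the global file_path
            while True:
                block = f.read(BLOCK_SIZE)  # read at most 1024 bytes per step
                if block:
                    yield block  # hand one block to the caller, then pause here
                else:
                    return  # end of file: stop the generator

file_path = './i-am-a-very-large-file'

for block in read_file(file_path):
    print(block)
```

Let's run it and monitor the memory change.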

Yay! While the console crazily prints meaningless text, the memory usage is extremely low compared to the previous version: only 2,928 KB in total! And the memory graph is an absolutely flat line!
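The same `tracemalloc` approach can be pointed at the generator version. This sketch uses a 10 MB random file instead of 4 GB. Its `read_file` mirrors the 4th Attempt, except that the block size is a parameter (my addition). `peak_bytes_generator` is a hypothetical helper. Only a block or two plus the file's read buffer is alive at any moment, so the peak stays tiny compared to the file size.

```python
import os
import tempfile
import tracemalloc

def read_file(f_path, block_size=1024):
    """Like the 4th Attempt: yield the file one block at a time."""
    if os.path.isfile(f_path):
        with open(f_path, 'rb') as f:
            while True:
                block = f.read(block_size)
                if not block:
                    return
                yield block

def peak_bytes_generator(path):
    """Hypothetical helper: (bytes seen, peak traced memory) while consuming read_file()."""
    tracemalloc.start()
    try:
        total = 0
        for block in read_file(path):
            total += len(block)  # "process" the block; it is freed once replaced
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return total, peak

fd, path = tempfile.mkstemp()
with os.fdopen(fd, 'wb') as out:
    out.write(os.urandom(10_000_000))  # 10 MB stand-in for the 4 GB file

total, peak = peak_bytes_generator(path)
print(total, peak)  # total is 10,000,000; peak is a tiny fraction of that
os.remove(path)
```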

Why is it so memory-efficient? The secret is the `yield` keyword in our solution.

To understand how `yield` works, we need to know the concept of a generator. For a very clear and concise explanation, check out What does the “yield” keyword do? on StackOverflow.

As a quick summary, `yield` turns `read_file` into a generator function. Calling `read_file(file_path)` does not run its body right away; instead, it returns a generator object. Each time the `for` loop asks that generator for the next item, the function runs until it reaches `yield block`. It hands back one block of bytes and pauses there until the next item is requested. So only one block of the file is held in memory at a time. The `for` loop reads the whole file by resuming the same generator over and over, consuming only enough memory for one block each time.

So that's how to open a file in Python like a pro, taking the extreme cases into account (which are actually quite common if your service is performance-critical). I hope you enjoy this blog post, and feel free to share your ideas here!

You can grab the demo source code on GitHub here: ZhiyueYi/how-to-open-a-file-in-python-like-a-pro-demo

Thanks to @Vedran Čačić's comments, I learned about better solutions.

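If we have a very large text file, we can simply use:

```python
with open(path) as file:
    for line in file:
        print(line)
```

And that's totally OK. Iterating over a file object hands back one line at a time instead of loading the whole file, so memory use stays low.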
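Here's a tiny sketch of why this works. A file object is its own iterator, and each step of the iteration hands back just one more line, reading the file through a small buffer rather than all at once. The three-line temporary file is a stand-in for a large one.

```python
import os
import tempfile

fd, path = tempfile.mkstemp(suffix='.txt')
with os.fdopen(fd, 'w', encoding='utf-8') as out:
    out.write('first\nsecond\nthird\n')  # stand-in for a very large text file

with open(path, encoding='utf-8') as file:
    # A file object is its own iterator, so the for loop pulls one line per step.
    is_own_iterator = iter(file) is file
    first = next(file)                           # reads just the first line
    rest = [line.rstrip('\n') for line in file]  # resumes where next() stopped

print(is_own_iterator, repr(first), rest)  # True 'first\n' ['second', 'third']
os.remove(path)
```

Also note that each `line` keeps its trailing newline, so `print(line)` prints an extra blank line. `print(line, end='')` avoids that.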

Or, if we really want to process a binary file in chunks (as I did in the 4th Attempt, 1024 bytes per block), we can use `BufferedReader` from the built-in `io` module to achieve the same thing, like this:

```python
import io

file_path = './i-am-a-very-large-file'
BLOCK_SIZE = 1024

with open(file_path, 'rb') as f:
    # Wrap the already-open descriptor; closefd=False leaves closing it to `with`,
    # so the descriptor is not closed twice.
    fi = io.FileIO(f.fileno(), closefd=False)
    fb = io.BufferedReader(fi)
    while True:
        block = fb.read(BLOCK_SIZE)
        if block:
            print(block)
        else:
            break
```

And now I notice that the method in the 4th Attempt was just dumbly reinventing the wheel (`BufferedReader`). LOL, knowledge is power.
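In fact, with default buffering, `open(path, 'rb')` already returns an `io.BufferedReader`, so the extra `FileIO`/`BufferedReader` layer isn't strictly needed. Here's a sketch that reads fixed-size blocks straight from the file object. It uses the standard `iter(callable, sentinel)` idiom with `functools.partial`, which is a swapped-in technique, not the one from the comments. `iter_blocks` is a hypothetical name.

```python
import functools
import os
import tempfile

BLOCK_SIZE = 1024

def iter_blocks(path, block_size=BLOCK_SIZE):
    """Yield the file at `path` in chunks of at most `block_size` bytes."""
    with open(path, 'rb') as f:
        # iter(callable, sentinel) calls f.read(block_size) until it returns b'' (EOF).
        yield from iter(functools.partial(f.read, block_size), b'')

# Usage: a 2,500-byte temporary file stands in for the large one.
fd, path = tempfile.mkstemp()
with os.fdopen(fd, 'wb') as out:
    out.write(b'a' * 2500)

with open(path, 'rb') as f:
    reader_type = type(f).__name__
print(reader_type)  # BufferedReader

sizes = [len(block) for block in iter_blocks(path)]
print(sizes)  # [1024, 1024, 452]
os.remove(path)
```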

So what's the lesson learned, besides how to open a file?

I think, first of all: don't be afraid to share your ideas even if they're not perfect (like mine), and don't be afraid to admit your mistakes. If we share more and interact more, we can gain insights from others and improve ourselves. Cheers~

